The Modern Guide To Robots.txt

Robots.txt just turned 30 – cue the existential crisis! Like many hitting the big 3-0, it’s wondering if it’s still relevant in today’s world of AI and advanced search algorithms.

Spoiler alert: It definitely is!

Let’s take a look at how this file still plays a key role in managing how search engines crawl your site, how to leverage it correctly, and common pitfalls to avoid.

What Is A Robots.txt File?

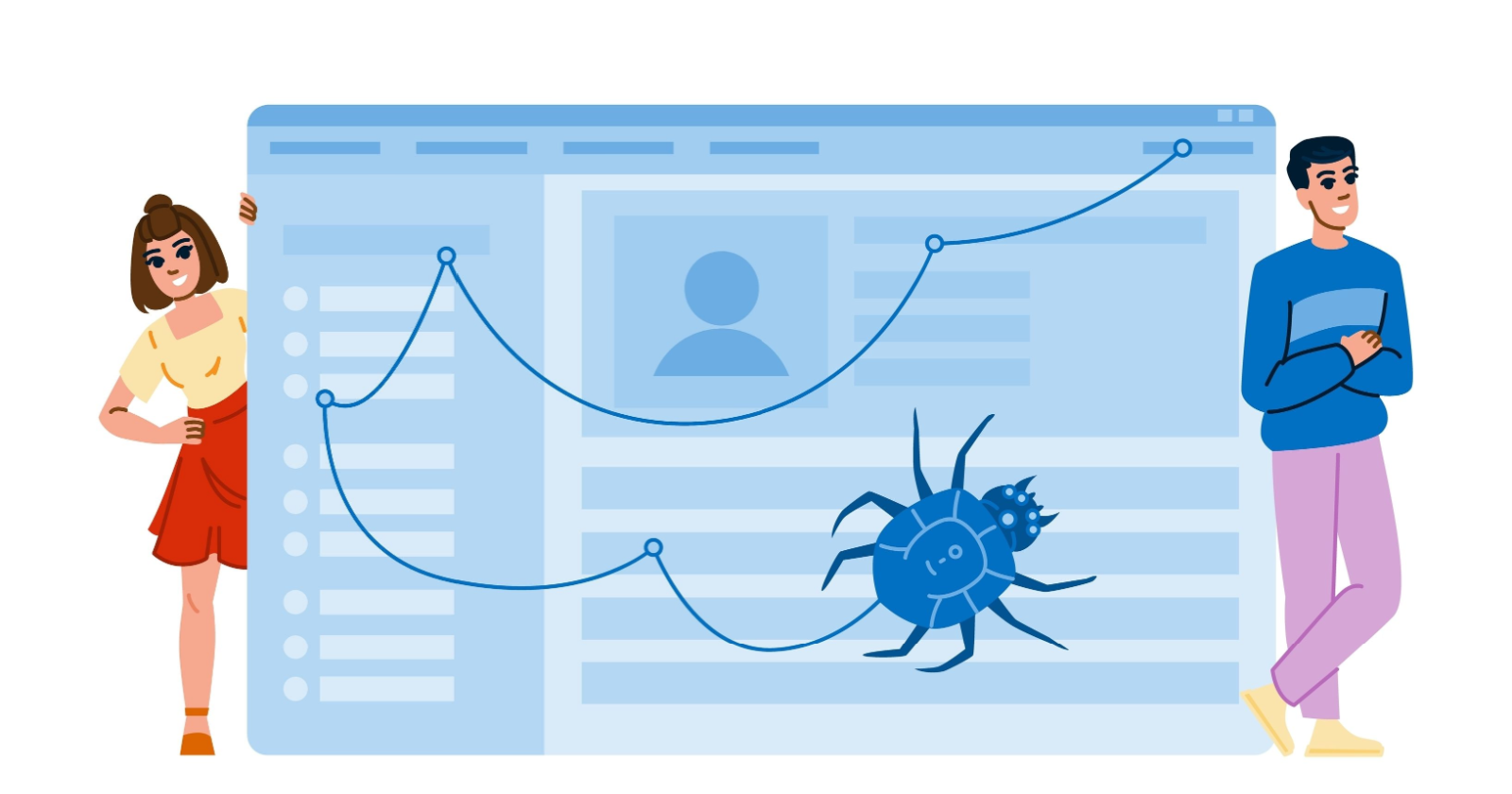

A robots.txt file provides crawlers like Googlebot and Bingbot with guidelines for crawling your site. Like a map or directory at the entrance of a museum, it acts as a set of instructions at the entrance of the website, including details on:

- Which crawlers are and aren’t allowed to enter.

- Any restricted areas (pages) that shouldn’t be crawled.

- Priority pages to crawl – via the XML sitemap declaration.

Its primary role is to manage crawler access to certain areas of a website by specifying which parts of the site are “off-limits.” This helps ensure that crawlers focus on the most relevant content rather than wasting the crawl budget on low-value content.

While a robots.txt guides crawlers, it’s important to note that not all bots follow its instructions, especially malicious ones. But for most legitimate search engines, adhering to the robots.txt directives is standard practice.

What Is Included In A Robots.txt File?

Robots.txt files consist of lines of directives for search engine crawlers and other bots.

Valid lines in a robots.txt file consist of a field, a colon, and a value.

Robots.txt files also commonly include blank lines to improve readability and comments to help website owners keep track of directives.
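As a rough illustration of the "field, colon, value" structure described above, the sketch below splits a robots.txt file into field/value pairs while skipping comments and blank lines. The function name `parse_robots_lines` and the sample rules (including the example.com sitemap URL) are made up for this example; real parsers handle more edge cases.

```python
def parse_robots_lines(text):
    """Split robots.txt text into (field, value) pairs.

    Comments (everything after '#') and blank lines are skipped,
    and field names are lowercased for easier comparison.
    """
    pairs = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line or ":" not in line:
            continue  # blank, or not a valid "field: value" line
        field, value = line.split(":", 1)  # split on the first colon only
        pairs.append((field.strip().lower(), value.strip()))
    return pairs


# Hypothetical sample file, not taken from any real site.
sample = """\
# Rules for all crawlers
User-agent: *
Disallow: /search/

Sitemap: https://www.example.com/sitemap.xml
"""

pairs = parse_robots_lines(sample)
for field, value in pairs:
    print(f"{field}: {value}")
```

Splitting on the first colon only matters here: the sitemap value is a URL that contains its own colon.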

To get a better understanding of what is typically included in a robots.txt file and how different sites leverage it, I looked at robots.txt files for 60 domains with a high share of voice across various industries.

Excluding comments and blank lines, the average number of lines across 60 robots.txt files was 152.

All sites analyzed included the user-agent and disallow fields in their robots.txt files, and a majority also included a sitemap declaration using the sitemap field.

Fields like allow and crawl-delay were used by some sites, but less frequently.

Robots.txt Syntax

Now that we’ve covered what types of fields are typically included in a robots.txt, we can dive deeper into what each one means and how to use it.

For more information on robots.txt syntax and how it is interpreted by Google, check out Google’s robots.txt documentation.

User-Agent

The user-agent field specifies which crawler the directives (disallow, allow) apply to. You can use the user-agent field to create rules that apply to specific bots/crawlers or use a wildcard to indicate rules that apply to all crawlers.

For example, the syntax below indicates that the directives that follow apply only to Googlebot.

user-agent: Googlebot

If you want to create rules that apply to all crawlers, you can use a wildcard instead of naming a specific crawler.

user-agent: *

You can include multiple user-agent fields within your robots.txt to provide specific rules for different crawlers or groups of crawlers.
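To see how separate user-agent groups play out, here is a small sketch using Python's standard-library `urllib.robotparser`. The rules, the example.com URLs, and the crawler name `OtherBot` are invented for illustration, and this parser is only an approximation of how any particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Googlebot, one for everyone else.
robots_txt = """\
user-agent: Googlebot
disallow: /private/

user-agent: *
disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Googlebot follows its own group, so only /private/ is off-limits to it.
googlebot_private = parser.can_fetch("Googlebot", "https://www.example.com/private/a.html")
googlebot_tmp = parser.can_fetch("Googlebot", "https://www.example.com/tmp/report.html")

# Any other crawler falls back to the wildcard group.
other_private = parser.can_fetch("OtherBot", "https://www.example.com/private/a.html")
other_tmp = parser.can_fetch("OtherBot", "https://www.example.com/tmp/report.html")

print(googlebot_private, googlebot_tmp, other_private, other_tmp)
```

Note that a crawler with its own group does not also inherit the wildcard group's rules, which is why Googlebot can still fetch /tmp/ in this sketch.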

Disallow And Allow

The disallow field specifies paths that designated crawlers should not access. The allow field specifies paths that designated crawlers can access.

Because Googlebot and other crawlers will assume they can access any URLs that aren’t specifically disallowed, many sites keep it simple and only specify what paths should not be accessed using the disallow field.

If you’re using both allow and disallow fields within your robots.txt, make sure to read the section on order of precedence for rules in Google’s documentation.

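Generally, in the case of conflicting rules, Google will use the more specific rule, meaning the one with the longer matching path. If the conflicting rules are equally specific, Google uses the least restrictive one.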

Note that if there is no path specified for the allow or disallow fields, the rule will be ignored.
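The precedence behavior described above can be sketched in a few lines. This is a simplified model, assuming plain prefix matching only (no special characters) and a tie-break in favor of allow; the function name `is_allowed` and the sample paths are hypothetical, and Google's documentation remains the authority on the actual behavior.

```python
def is_allowed(path, rules):
    """Decide whether `path` may be crawled under longest-match precedence.

    `rules` is a list of (field, value) pairs such as ("disallow", "/shop/").
    Only plain prefix matching is handled; wildcards are out of scope here.
    """
    best_length = -1
    allowed = True  # nothing matched: crawling is allowed by default
    for field, value in rules:
        if not value:
            continue  # a rule with no path is ignored
        if not path.startswith(value):
            continue  # rule does not apply to this path
        is_allow = field.lower() == "allow"
        # Prefer the longer (more specific) path; on a tie, prefer allow.
        if len(value) > best_length or (len(value) == best_length and is_allow):
            best_length = len(value)
            allowed = is_allow
    return allowed


# Hypothetical rule set for illustration.
rules = [
    ("disallow", "/shop/"),
    ("allow", "/shop/sale/"),
    ("disallow", ""),  # no path, so this rule is skipped
]

print(is_allowed("/shop/cart", rules))        # only the disallow rule matches
print(is_allowed("/shop/sale/shoes", rules))  # the longer allow rule wins
print(is_allowed("/blog/", rules))            # no rule matches
```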

This is very different from only including a forward slash (/) as the value for the disallow field, which would match the root domain and any lower-level URL (translation: every page on your site).

If you want your site to show up in search results, make sure your robots.txt doesn’t contain the following rule. It will block all search engines from crawling all pages on your site.

user-agent: *

disallow: /

This might seem obvious, but believe me, I’ve seen it happen.
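A quick way to catch this mistake before it goes live is to run a draft file through a parser. The sketch below uses Python's standard-library `urllib.robotparser` to contrast a lone forward slash with an empty disallow value; the helper `can_crawl`, the crawler name `ExampleBot`, and the example.com URL are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, user_agent="ExampleBot"):
    """Return True if `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A single slash matches every URL on the site.
block_everything = "user-agent: *\ndisallow: /\n"

# An empty value blocks nothing.
block_nothing = "user-agent: *\ndisallow:\n"

page = "https://www.example.com/products/blue-widget"
blocked_site = can_crawl(block_everything, page)
open_site = can_crawl(block_nothing, page)

print(blocked_site, open_site)
```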

URL Paths

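URL paths are the portion of the URL after the protocol, subdomain, and domain, beginning with a forward slash (/). For example, in https://www.example.com/guides/technical/robots-txt, the URL path is /guides/technical/robots-txt.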

URL paths are case-sensitive, so be sure to double-check that the uppercase and lowercase letters in the robots.txt file match the intended URL path.
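The following sketch shows one way to pull the path out of a full URL and why case matters when comparing it to a rule. The helper name `url_path` and the example.com URL are hypothetical, and the comparison is a plain prefix check rather than a full robots.txt matcher.

```python
from urllib.parse import urlsplit


def url_path(url):
    """Return the part of the URL that robots.txt rules are compared against."""
    parts = urlsplit(url)
    path = parts.path or "/"  # a bare domain is treated as the root path
    if parts.query:
        path += "?" + parts.query  # keep the query string attached
    return path


path = url_path("https://www.example.com/Guides/Robots-Txt?page=2")
print(path)

# Same letters, different case: only the exact-case rule matches.
matches_lowercase_rule = path.startswith("/guides/")
matches_exact_case_rule = path.startswith("/Guides/")
print(matches_lowercase_rule, matches_exact_case_rule)
```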

Special Characters

Google, Bing, and other major search engines also support a limited number of special characters to help match URL paths.

A special character is a symbol that has a unique function or meaning instead of just representing a regular letter or number.

The special characters supported by Google in robots.txt are:

- Asterisk (*) – matches 0 or more instances of any character.

- Dollar sign ($) – designates the end of the URL.

The rule specified for the crawler named FastCrawlingBot is to wait five seconds after each request before requesting another URL. However, Google does not support the crawl-delay field and will ignore it. Other search engines like Bing and Yahoo do respect crawl-delay directives for their web crawlers.

Blocking APIs helps protect their usage and reduce unnecessary server load from bots trying to access them.

This also includes blocking “bad” bots that engage in unwanted or malicious activities, as well as AI crawlers that may raise privacy concerns.

By auditing and optimizing your robots.txt file, you can ensure that important pages and resources are accessible to search engines while avoiding common pitfalls that could harm your site’s visibility.
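One simple audit is to check a list of important paths against your disallow patterns, taking the asterisk and dollar sign characters listed earlier into account. The sketch below is an approximation written for this guide, not Google's matcher: it translates each pattern into a regular expression, and the function names, patterns, and paths are all hypothetical.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")  # '$' pins the match to the end of the URL
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then join the pieces with '.*' for every '*'.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + (r"\Z" if anchored else ""))


def matches(pattern, path):
    """Return True if `path` is matched by the robots.txt `pattern`."""
    return pattern_to_regex(pattern).match(path) is not None


# Hypothetical disallow patterns and pages you want crawled.
disallow_patterns = ["/search*", "/*?sort=", "/*.pdf$"]
important_paths = ["/", "/shoes", "/shoes?sort=price", "/guides/robots.pdf"]

blocked = [
    path
    for path in important_paths
    if any(matches(pattern, path) for pattern in disallow_patterns)
]
print(blocked)
```

Because of the trailing dollar sign, `/*.pdf$` matches `/guides/robots.pdf` but would not match the same path followed by a query string.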

Robots.txt is a crucial tool that guides search engine crawlers on which areas of a website to access or avoid. By using this file, website owners can optimize crawl efficiency by directing crawlers to focus on high-value pages.

TL;DR

- A robots.txt file is used to guide search engine crawlers, specifying which areas of a website to access or avoid.

- Key fields in the robots.txt file include “User-agent” for specifying the target crawler, “Disallow” for restricted areas, and “Sitemap” for priority pages. Additional directives like “Allow” and “Crawl-delay” can also be included.

- Websites commonly use robots.txt to block internal search results, low-value pages (such as filters and sort options), and sensitive areas like checkout pages and APIs.

- Some websites are blocking AI crawlers like GPTBot, but this may not be the best strategy for sites looking to increase traffic from various sources. Consider allowing OAI-SearchBot at a minimum for maximum site visibility.

- To ensure success, each subdomain should have its own robots.txt file, directives should be tested before publishing, an XML sitemap declaration should be included, and key content should not be accidentally blocked.
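Several of the points above (testing before publishing, declaring a sitemap, handling AI crawlers, and not blocking key content) can be checked together on a draft file. This is a minimal sketch using Python's standard-library `urllib.robotparser`, whose `site_maps()` method requires Python 3.8 or later. The draft rules and example.com URLs are invented for illustration, and the parser only approximates how real crawlers behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft: block GPTBot, allow OAI-SearchBot, and keep
# checkout and API paths away from all other crawlers.
draft = """\
user-agent: GPTBot
disallow: /

user-agent: OAI-SearchBot
allow: /

user-agent: *
disallow: /checkout/
disallow: /api/

sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(draft.splitlines())

checks = {
    "gptbot_blog": parser.can_fetch("GPTBot", "https://www.example.com/blog/"),
    "searchbot_blog": parser.can_fetch("OAI-SearchBot", "https://www.example.com/blog/"),
    "googlebot_blog": parser.can_fetch("Googlebot", "https://www.example.com/blog/"),
    "googlebot_checkout": parser.can_fetch("Googlebot", "https://www.example.com/checkout/cart"),
}
sitemaps = parser.site_maps()  # list of declared sitemap URLs, or None

for name, allowed in checks.items():
    print(name, allowed)
print(sitemaps)
```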

Featured Image: Se_vector/Shutterstock

Frequently Asked Questions

1. What is the purpose of a robots.txt file?

A robots.txt file guides search engine crawlers on which areas of a website to access or avoid, optimizing crawl efficiency.

2. What are the key fields in a robots.txt file?

The key fields include “User-agent” for specifying the target crawler, “Disallow” for restricted areas, and “Sitemap” for priority pages. Additional directives like “Allow” and “Crawl-delay” can also be included.

3. Why do websites commonly use robots.txt?

Websites use robots.txt to block internal search results, low-value pages, and sensitive areas to focus crawlers on high-value content.

4. Should websites block AI crawlers like GPTBot?

While some websites block AI crawlers, it may not be the best strategy for sites looking to increase traffic from various sources. Consider allowing OAI-SearchBot for maximum site visibility.

5. How can website owners set their site up for success with robots.txt?

Website owners should ensure each subdomain has its own robots.txt file, test directives before publishing, include an XML sitemap declaration, and avoid accidentally blocking key content.
